SDSC’s COO, Andrew Porter, and Head of Machine Learning, Margaux Masson-Forsythe, recently attended the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) Next Big Thing Innovation Weekend in Houston, Texas. There, Margaux presented SDSC’s pioneering work, moderated a panel, and strengthened connections within the surgical research community, furthering SDSC’s mission to advance data-driven surgical excellence.

The SAGES NBT Innovation Weekend served as a hub for both industry leaders and clinicians to openly discuss innovations in surgical technology and practices. SAGES’ mission – “to innovate, educate, and collaborate to improve patient care” – was at the forefront of the discussions, which centered on the next 5-10 years of surgical advancements. A key theme was facilitating cross-adoption of techniques from one specialty to another, with particular focus on advancing technologies for minimally invasive gastrointestinal and endoscopic surgery.

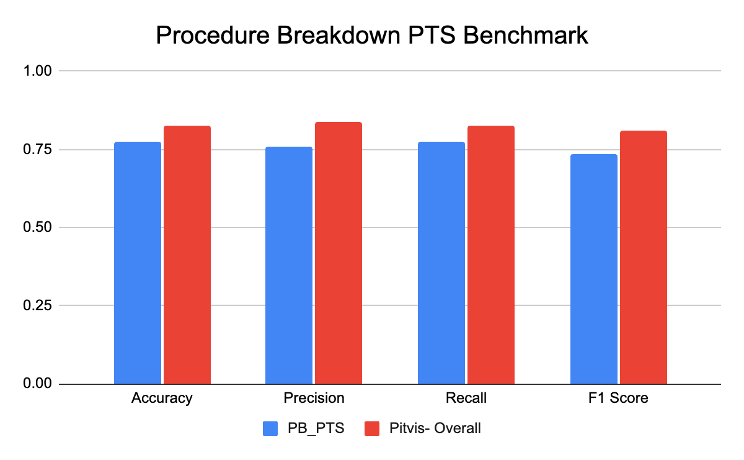

On the first morning of the conference, to a packed house, Margaux delivered a compelling presentation on “Enhancing Task Assessment Using Video Analytics”, in which she showcased SDSC’s latest progress on models that assess whether surgical tasks (e.g., suturing) were performed well. While this work is in its early stages and not yet integrated into the Surgical Video Platform (SVP), it represents a critical step toward ML pipelines that provide actionable feedback rather than a simple efficiency score. If we can create an AI model that answers “yes” or “no” to questions pertaining to surgical competency, we could produce a checklist of surgical tasks that acts as a skill evaluation for surgeons or residents in training.

By repurposing some of our current ML models, like surgical tool detection and phase segmentation, SDSC designed the task assessment pipeline to evaluate questions regarding surgical performance. Given a surgical video input, the pipeline extracts frame-based features (such as tool detection, frame features, and tissue segmentation) and temporal features (such as full video temporal analysis, clip-based features, and optical flow). The extracted features are then passed to a router, which determines the appropriate “expert” models to evaluate the task at hand.

For instance, the system can assess whether a resident successfully performed an intracorporeal knot using a surgeon’s knot, followed by two additional throws. The router draws on a mixture of specialized “experts” – including instrument tracking, action recognition, anatomy analysis, and procedure outcome models – to determine whether the task was completed correctly. The final output is either a “yes” or “no” answer to the embedded question: an objective assessment of surgical performance that provides valuable insights into skill development and procedural proficiency.
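To make the flow from extracted features to a “yes” or “no” answer more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not SDSC’s implementation: the `Expert` class, the feature names, the keyword-based routing rule, the averaging step, and the 0.5 threshold are all hypothetical stand-ins, and a real router and real experts would presumably be learned models operating on video features.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical feature bundle. The keys loosely mirror the feature types
# named in the text; the structure and values are illustrative assumptions.
Features = Dict[str, float]


@dataclass
class Expert:
    name: str
    keywords: List[str]                 # question terms this expert handles
    score: Callable[[Features], float]  # confidence in [0, 1] that the task was done correctly


def route(question: str, experts: List[Expert]) -> List[Expert]:
    """Toy routing rule: pick experts whose keywords appear in the question."""
    q = question.lower()
    return [e for e in experts if any(k in q for k in e.keywords)]


def assess(question: str, features: Features, experts: List[Expert],
           threshold: float = 0.5) -> str:
    """Average the selected experts' scores and map the result to yes/no."""
    selected = route(question, experts)
    if not selected:
        raise ValueError("no expert matches the question")
    mean = sum(e.score(features) for e in selected) / len(selected)
    return "yes" if mean >= threshold else "no"


# Hypothetical experts; each scoring function is a placeholder for a model.
EXPERTS = [
    Expert("instrument_tracking", ["knot", "suture"],
           lambda f: f["tool_detection_conf"]),
    Expert("action_recognition", ["knot", "throw"],
           lambda f: min(1.0, f["throws_detected"] / 3)),  # 3 expected throws is an assumption
    Expert("anatomy_analysis", ["tissue", "anatomy"],
           lambda f: f["tissue_seg_conf"]),
]

QUESTION = ("Did the resident tie an intracorporeal knot using a surgeon's knot "
            "followed by two additional throws?")
FEATURES = {"tool_detection_conf": 0.9, "throws_detected": 3, "tissue_seg_conf": 0.8}

answer = assess(QUESTION, FEATURES, EXPERTS)
```

In this sketch the question routes to the instrument tracking and action recognition experts only, since none of the anatomy keywords appear in it. Averaging is the simplest possible way to combine expert outputs; a weighted or learned combination would be an equally plausible design.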

A recurring theme throughout the conference was the challenge of ensuring AI models are trained on high-quality, representative data. As Margaux highlighted in her TEDx Talk, the effectiveness of AI in surgery is only as strong as the datasets that power it. Despite the gold mine of intraoperative data stored in hospital systems and held by surgical device companies, much of it remains untapped – an oversight SDSC is actively working to address. Unlocking this data has the potential to fuel research, refine algorithms, and drive better patient outcomes on a global scale.

SDSC’s work on AI-powered surgical task assessment is poised to revolutionize the field. Imagine a system where real-time intraoperative feedback could help refine a surgeon’s technique, enhancing both safety and efficiency. SVP’s procedure breakdown technology divides procedures into distinct phases, enabling focused analysis and targeted skill development. This type of intelligent video analytics could redefine surgical education and quality control, creating a structured, data-driven approach to skill refinement.

Beyond her presentation, Margaux also moderated the session “Agents for Efficiency and Simulation Improvement”. This session brought together leading experts to discuss the technical challenges of integrating AI-driven computer vision into clinical practice. Speakers explored a range of AI applications in surgery, such as real-time computer vision for hospital efficiency, AI operational assistants for handling non-clinical tasks, and the broader real-world impact of AI in the operating room (OR). The session concluded with a dynamic panel discussion, where experts debated the evolving role of AI in surgery and the key challenges that must be addressed for widespread adoption.

SDSC recognizes that collaboration is critical to moving the field forward, and we are seeking general surgeons to collaborate on AI-driven surgical research as we expand into new procedure types. By bringing together surgeons, technologists and researchers, SDSC is bridging the gap between AI development and real-world surgical application. The insights gained at SAGES will help guide the next phases of our work, ensuring that AI-powered solutions continue to be clinically relevant, surgeon-driven, and positioned for real-world impact.