As a surgeon or medical resident, you're no stranger to the rapid advancements in medical technology. From robotic-assisted procedures to real-time imaging, the healthcare industry has embraced the power of innovation. However, one area that has largely been overlooked is the analysis of video footage recorded during surgery. SDSC is working to change that.

Machine Learning / Computer Vision (ML/CV), a powerful subfield of Artificial Intelligence (AI), is set to revolutionize the way we approach surgical video analysis. Unlike the more widely known generative AI (genAI) models, which excel at creating novel content such as images or text, ML/CV models are specifically engineered to process, interpret, and understand existing visual data, such as video footage. While some genAI architectures have demonstrated image analysis capabilities, ML/CV remains focused specifically on advanced visual understanding.

It’s important to be clear about what we mean by “surgical video”. We don’t analyze footage from a camera mounted on the ceiling of an operating room and pointed down at the patient. We analyze footage from endoscopic cameras (tiny cameras at the end of a long, thin tube) or microscope cameras, both of which are used to see inside the patient’s body and perform surgery via a video screen. Because our ML/CV models see exactly what the surgeon saw while performing the operation, we eliminate a whole category of potential analysis error: the gap between what an external camera records and what the surgeon actually sees.

At SDSC, we're at the forefront of harnessing ML/CV for surgical video analysis. Our cutting-edge models can identify specific tools such as curettes, graspers, suction devices, and dissectors, and follow their movements and usage throughout an entire procedure. But our capabilities go beyond tool tracking: our models also recognize the different phases of a surgical procedure from what is happening on screen. This level of granular, data-driven insight gives surgeons unprecedented visibility into their own workflows and techniques.

In addition, we're actively exploring how to judiciously incorporate select genAI capabilities into our solutions. By blending the strengths of ML/CV and genAI, we aim to provide our users with the most comprehensive, stable, and clinically relevant features possible.

To ensure our analysis is meaningful and relevant, we've collaborated extensively with a community of surgical experts. Together, we've defined standardized names and classifications for operating room tools and surgical phases, aligning our models with established practice standards and consensus publications.

“The insights don't come from the model, the model produces the data that we use to derive the insights. [...] answering the question: what does this mean? It isn't part of the model itself, it comes from humans and experts who design the analysis” - Dr. Dhiraj Pangal, Neurosurgery Resident, Stanford University

By precisely tracking the usage of surgical tools and the timing of different procedural steps, our platform generates a wealth of data-driven analytics. These analytics can help you identify areas for improvement, optimize your techniques, and ultimately enhance patient outcomes. For instance, the data might reveal unusual tool usage patterns, which could indicate a need for additional training or even that you have discovered a more efficient approach.

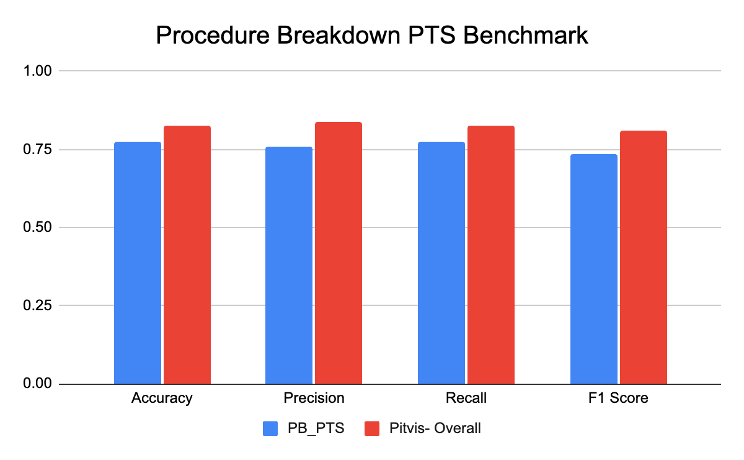
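For readers curious how frame-by-frame model output can turn into analytics like these, here is a minimal Python sketch. It assumes, hypothetically, that a model emits one phase label and a set of visible tools for each sampled frame at a fixed rate; the frame rate, phase names, and helper functions are illustrative assumptions, not SDSC's actual pipeline.

```python
from collections import Counter
from itertools import groupby

# Hypothetical per-frame model output: (phase_label, set_of_visible_tools).
# The sampling rate, phase names, and tool names are illustrative assumptions.
FPS = 1  # assume one prediction per second of video

SAMPLE_FRAMES = [
    ("approach", {"suction"}),
    ("approach", {"suction", "dissector"}),
    ("resection", {"curette", "suction"}),
    ("resection", {"curette"}),
    ("resection", {"grasper"}),
    ("closure", set()),
]


def tool_usage_seconds(frames, fps=FPS):
    """Total on-screen time in seconds for each detected tool."""
    counts = Counter()
    for _, tools in frames:
        counts.update(tools)  # +1 frame for every tool visible in this frame
    return {tool: n / fps for tool, n in counts.items()}


def phase_segments(frames, fps=FPS):
    """Merge consecutive frames with the same phase into (phase, start_s, end_s)."""
    segments = []
    start = 0
    for phase, run in groupby(frames, key=lambda frame: frame[0]):
        length = sum(1 for _ in run)
        segments.append((phase, start / fps, (start + length) / fps))
        start += length
    return segments


if __name__ == "__main__":
    print(tool_usage_seconds(SAMPLE_FRAMES))
    print(phase_segments(SAMPLE_FRAMES))
    # -> [('approach', 0.0, 2.0), ('resection', 2.0, 5.0), ('closure', 5.0, 6.0)]
```

Collapsing runs of identical per-frame labels into segments is what turns a long stream of predictions into phase durations that can be compared across cases.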

To validate the accuracy and reliability of our analytics, we follow a rigorous process. We keep a subset of surgical procedures completely separate from our model training data and reserve it for evaluating our CV models. By comparing the models' predictions on this unseen data with ground-truth annotations provided by human surgical experts, we can measure how accurate the models really are. This helps ensure our CV models meet the highest standards for real-world application.
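The sketch below illustrates two ingredients of this kind of evaluation under stated assumptions: a deterministic, procedure-level hold-out split and a simple frame-level accuracy score against expert labels. The 20% hold-out fraction, the hash-based split, and the function names are hypothetical choices for illustration, not a description of SDSC's exact protocol.

```python
import hashlib

# Illustrative sketch: the 20% hold-out fraction, the procedure IDs, and the
# phase labels below are assumptions, not SDSC's actual configuration.
HOLDOUT_FRACTION = 0.2


def is_holdout(procedure_id: str, fraction: float = HOLDOUT_FRACTION) -> bool:
    """Deterministically assign a whole procedure to the evaluation set.

    Splitting by procedure (not by frame) keeps every frame of a held-out
    video out of training, so the model is scored on footage it never saw.
    """
    digest = hashlib.sha256(procedure_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32  # value in [0, 1)
    return bucket < fraction


def frame_accuracy(predicted, ground_truth):
    """Fraction of frames where the predicted label matches the expert label."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("need equal-length, non-empty label sequences")
    matches = sum(p == g for p, g in zip(predicted, ground_truth))
    return matches / len(predicted)


if __name__ == "__main__":
    procedures = [f"case-{i:03d}" for i in range(10)]
    eval_set = [p for p in procedures if is_holdout(p)]
    train_set = [p for p in procedures if not is_holdout(p)]
    print("held out:", eval_set)
    print("accuracy:", frame_accuracy(
        ["approach", "resection", "resection", "closure"],
        ["approach", "approach", "resection", "closure"],
    ))  # 3 of 4 frames agree -> 0.75
```

Splitting by procedure rather than by individual frame matters because neighboring frames from the same video are nearly identical; a frame-level split would let the model be tested on near-copies of its training data. Frame accuracy is also only one possible metric; per-phase or per-tool scores can reveal weaknesses that an overall average hides.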

Furthermore, we have implemented a robust model monitoring system that continuously tracks the performance of our CV models on the surgical videos uploaded by our users. If the system detects signs of poor performance, it automatically flags the case to our team of AI experts for investigation and model refinement. This allows us to stay agile and keep improving our analytics to serve the evolving needs of surgeons like you.
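User-uploaded videos typically arrive without expert annotations, so a monitoring system has to rely on indirect signals. As one hypothetical example (an assumed heuristic, not SDSC's actual system), the sketch below flags a video for human review when the model's per-frame confidence scores are low on average or frequently uncertain; all thresholds and names are illustrative.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical monitoring heuristic: the thresholds and the use of per-frame
# confidence scores are illustrative assumptions, not SDSC's actual system.
LOW_CONFIDENCE = 0.5       # a frame scoring below this counts as "uncertain"
MAX_UNCERTAIN_SHARE = 0.3  # flag if more than 30% of frames are uncertain
MIN_MEAN_CONFIDENCE = 0.7  # ...or if average confidence drops below this


@dataclass
class MonitorResult:
    video_id: str
    mean_confidence: float
    uncertain_share: float
    flagged: bool


def check_video(video_id: str, confidences: list[float]) -> MonitorResult:
    """Summarize per-frame confidences and decide whether to flag for review."""
    if not confidences:
        raise ValueError("no predictions for video")
    avg = mean(confidences)
    uncertain = sum(c < LOW_CONFIDENCE for c in confidences) / len(confidences)
    flagged = avg < MIN_MEAN_CONFIDENCE or uncertain > MAX_UNCERTAIN_SHARE
    return MonitorResult(video_id, avg, uncertain, flagged)


if __name__ == "__main__":
    uploads = {
        "video-A": [0.95, 0.91, 0.88, 0.97],
        "video-B": [0.92, 0.41, 0.38, 0.45],
    }
    for vid, scores in uploads.items():
        result = check_video(vid, scores)
        if result.flagged:
            # Route to human experts instead of changing anything automatically.
            print(f"{vid}: flagged for expert review ({result})")
```

Confidence is only a proxy: a model can be confidently wrong, which is why a flag like this routes the video to human experts rather than triggering any automatic correction.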

Our commitment to excellence doesn't stop there. We actively solicit feedback from surgeons using our platform and incorporate your insights to refine both our models and the overall user experience. This continuous feedback loop allows us to provide you with the most valuable and impactful analytics possible.

If you're a surgeon or medical resident interested in leveraging the power of computer vision, we invite you to join our platform and engage with our team. Together, we can unlock new levels of surgical performance and drive research and innovation that benefit patients and the entire surgical ecosystem.